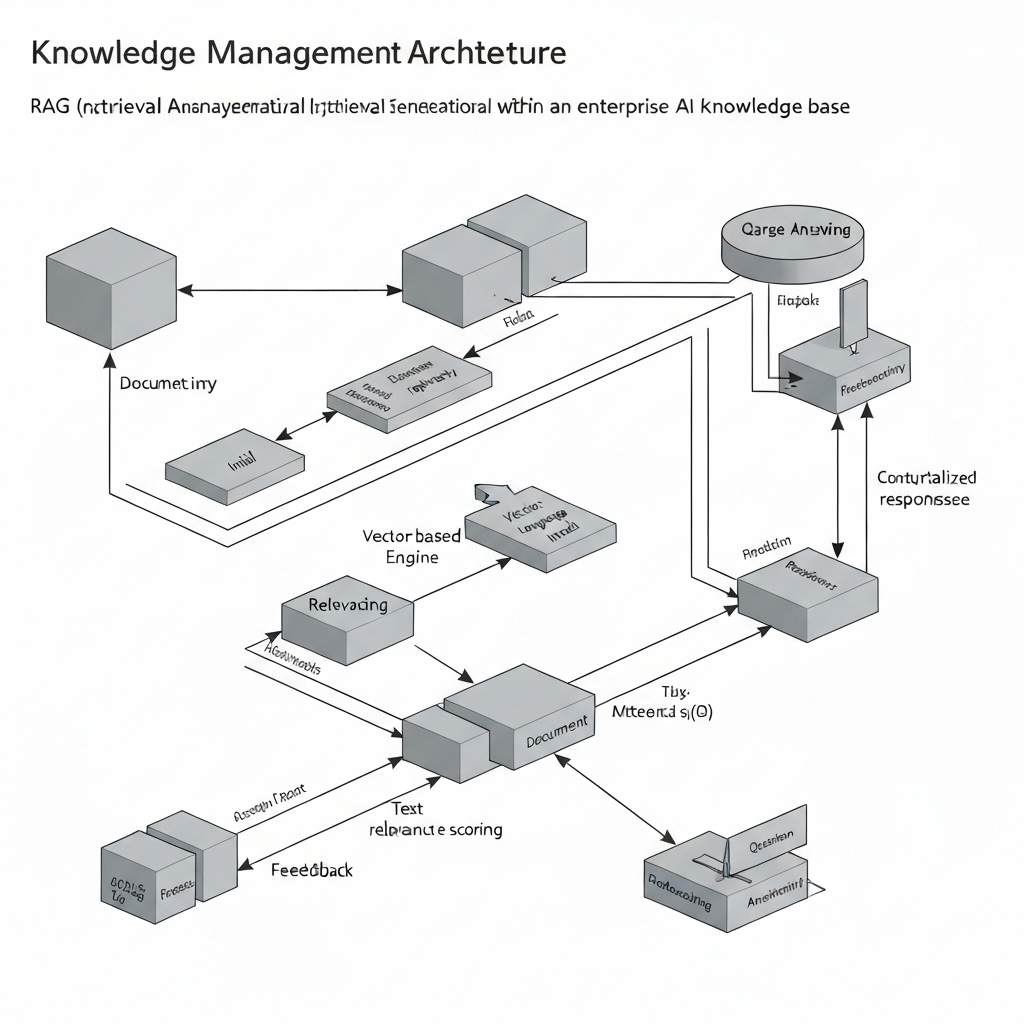

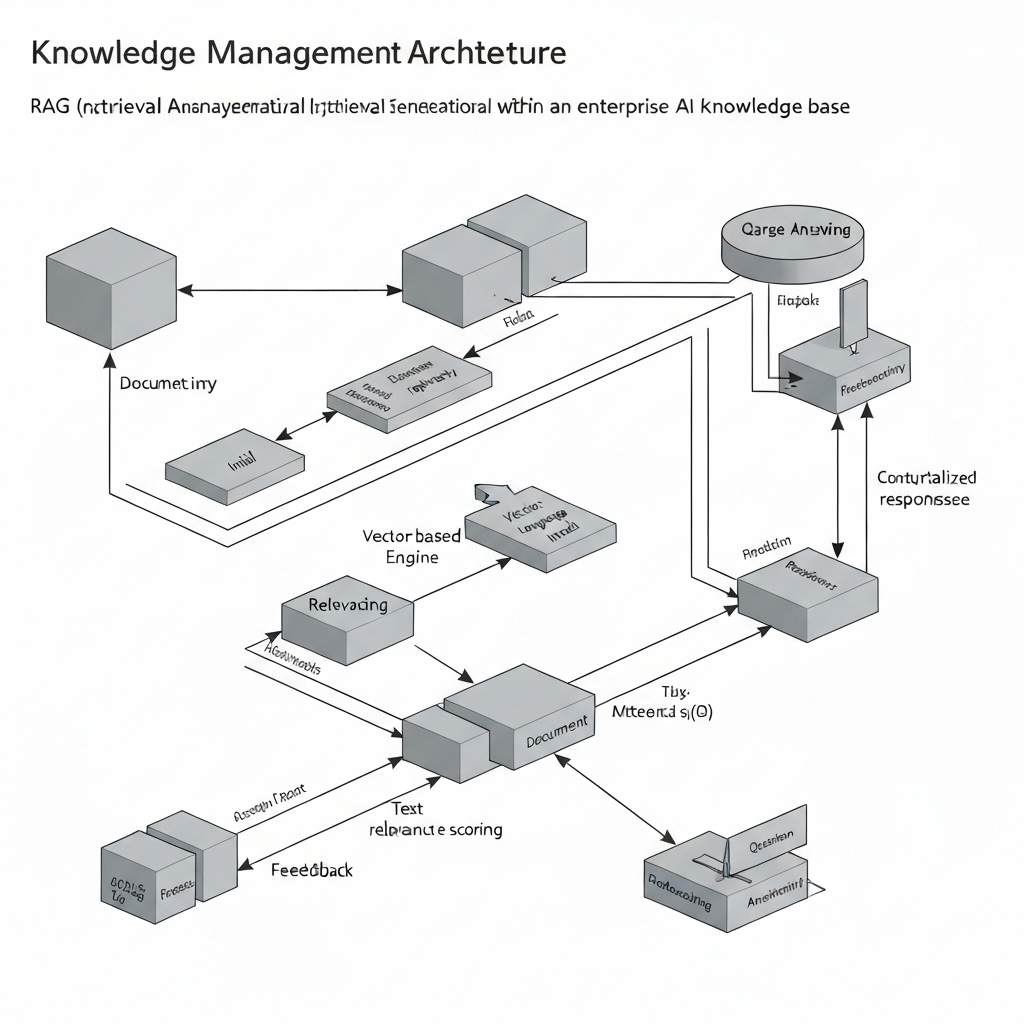

RAG Systems: Transforming Enterprise Knowledge Management

How Retrieval-Augmented Generation systems unlock organizational knowledge. Building custom RAG with LangChain, vector databases, and domain expertise.

Enterprise knowledge is often trapped in documents, databases, and people's heads. Retrieval-Augmented Generation (RAG) systems unlock this knowledge by pairing a large language model with your organization's own information, creating AI assistants whose answers are grounded in your business's documents rather than in general training data alone.

What Makes RAG Different

Unlike a general-purpose language model, which can only draw on what it saw during training, a RAG system can access and reason about your specific documents, policies, and procedures. At query time it retrieves the most relevant passages from your knowledge base and passes them to the model as context, so the generated response reflects your organization's expertise instead of a generic answer.
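The retrieve-then-generate flow can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions, not a production design: a toy word-count similarity stands in for a real embedding model, and the sample documents and the `embed`, `retrieve`, and `build_prompt` names are hypothetical. No model is called; the resulting prompt is what you would hand to whichever LLM you use.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice these would be chunks of your own documents.
DOCS = [
    "Expense reports must be submitted within 30 days of purchase.",
    "The VPN client must be updated before connecting from a new laptop.",
    "Pump model X200 requires a seal inspection every 500 operating hours.",
]

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (assumption): lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, passages: list[str]) -> str:
    """Place the retrieved passages in front of the question as context."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

query = "How often does the X200 pump need a seal inspection?"
top = retrieve(query, DOCS, k=1)
prompt = build_prompt(query, top)
print(prompt)
```

In a real deployment the `embed` function would be replaced by an embedding model and the linear scan in `retrieve` by a vector database query; the overall shape (embed the question, fetch the nearest passages, put them in the prompt) stays the same.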

Building Production RAG Systems

Successful RAG implementations require careful attention to document chunking, embedding quality, and retrieval strategies. We use LangChain for orchestration, vector databases like Pinecone or Weaviate for storage, and fine-tuned embedding models for domain-specific knowledge.
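Document chunking is the step that most directly shapes what retrieval can return, so a small example may help. The sketch below splits text into fixed-size word windows with overlap, so a sentence that straddles a boundary still appears whole in at least one chunk. The function name `chunk_words` and the default sizes are illustrative assumptions rather than recommended values; real pipelines often use token-aware or structure-aware splitters, such as those that ship with LangChain.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of up to chunk_size words, each sharing
    `overlap` words with the previous chunk."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the window already reached the end of the text
    return chunks

# Tiny demonstration with 10 placeholder words, 4 words per chunk, 1 word of overlap.
sample = " ".join(f"w{i}" for i in range(10))
demo = chunk_words(sample, chunk_size=4, overlap=1)
print(demo)  # ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Larger chunks give the model more surrounding context per hit, while smaller chunks make retrieval more precise; the overlap is a hedge against cutting a relevant passage in half.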

Real-World Applications

We've deployed RAG systems for technical documentation, compliance queries, and customer support. One manufacturing client reduced support ticket resolution time by 70% by giving their team instant access to 20 years of technical manuals and troubleshooting guides.

RAG systems are a practical application of AI to enterprise knowledge management. They don't replace human expertise; they amplify it by making organizational knowledge instantly accessible and actionable.